This article is about our final year project which dates back to 2015 AD, to re-identify people inside a camera network. Basically at the end of the day if you point a person in the CCTV and ask where did that person go it will generate a video of the person wandering around.

Okays not as worse as the sponge bob re-identification. But yea it works. And yes the system runs real time too.

The following video shows the system running. That’s a very long video I would suggest to keep on reading. 😀

The video below shows the final footage of the re-identified people. The system re-identifies most of the people. In some scenarios, it identifies people with the same color dress which appear in the same time frame. (The last person in the video footage with black color t-shirt)

Lets get in to some technical details shall we ?

1. So how do we detect the humans in the CCTV footage?

Before we try to detect the human we need to figure out the moving parts. For that, we used the background subtraction technique. So basically we take several continuous frames and analyze what are the things which are moving and what are the things which stay still. This helps us to come up with a rectangular area which includes the moving object. (blobs)

2. What will be the issues when detecting the moving objects inside a camera network?

Yes the opening of the doors (rotating fans, moving shadows) will be also captured as the foreground.

This is another problem where a group of people will be identified as one single blob.

As we can see, as the persons dress or skin color almost matches the floor color it classifies those parts as the background too. So we might get moving blobs with missing body parts.

3. How do we eliminate these non-human blobs?

Train a support vector machine.

The below image shows how a person is detected with the help of SVM. (Please do refer the documentation for more info)

4. Now we have identified the moving objects, now how do we re-identify a person?

Easy , face recognition. Hmm, that’s what we thought also. 😀

But because of the very low video quality of the footage, it is infeasible to do face recognition. So we used the Network graph and color moments to re-identify a person. When a person(blob) leaves a camera node the identified profile with the color moments will be sent to the adjacent nodes. And if a person appears in the next adjacent camera with a similar profile within the time limit then the person will be given the same profile.

5. Okay, so what is this color moment that we are talking about?

Utilizing Body Proportions to define regions in the image and extract the color features in that area. (HSV color model)

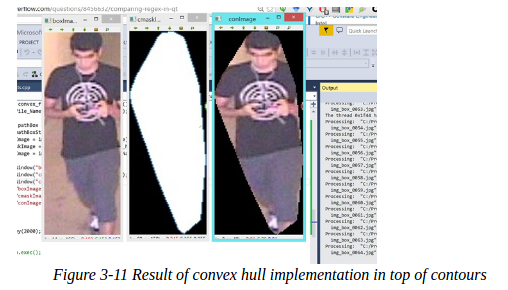

6. Okay, but when extracting the color in the rectangular area how can remove the non-human part in the rectangular area?

Simple, use a convex hull to remove the background from the rectangular area. Please do refer the documentation for implementation details.

7. As CCTV cameras have very low resolution and quality how to process the video?

Use a noise removal technique on the videos. We used a bilateral filter on the video frames. The image below shows an edge detection done on the sample low quality the first one without any enhancements and the second one with a bilateral filter. We can clearly see in the second image the edges are more clear and easier to segment.

8. What were some interesting problems that were faced ?

OH My god, the Mirrors. Not the Justin Timberlake’s mirror, but the usual mirror. In some footages, there are mirror that reflect the person in front. And those mirror images also started to get classified as humans and profiled for re-identification.

As you can see from the video there is a green line marked. If a blob is detected completely inside this marked region then it will be removed and will not be considered as a valid profile.

9. Why use a GPU is it a lame reason to play games?

Okay, we admit it, we played Bio Shock, GTA and all sorts of things. But we did that only for the purpose of testing the GPU. 😀 .And after 2 months we were convinced that was a good GPU.

And the second reason is with all the above-mentioned computation it is very hard to run more than 4 videos parallel in a CPU (Desktop i5). So we ended up parallelizing the several components of the system and compiled the open-cv to support CUDA to enhance the system.

The above video shows the same system running only with the CPU (4 cores) to show the performance gain. The system struggles to run in real time and crashes in a few seconds and the CPU usage reaches the full utilization level.

10. What are the lessons we learned?

Never use c++. Unless you have a c++ expert in your team. Period. 😀

11. Why is the UI so crappy?

To this question I like to quote the Nokia CEO.

Okays we accept it, we failed. But before you judge us you need to know that there are so many things going inside the UI for showing the videos parallel. Feed synchronization has been done to show the videos in parallel.

Although the videos are processed parallelly the CPU is used to show the feed, so some videos(less complex videos without many humans) process fast and some take time to process, so we needed to synchronize and there started the race condition and all sort of things. (All the videos must start processing at the same time and all should wait until the last video frame gets processed and move to the other one)

12. How can we improve the system to achieve more than 80% accuracy?

Hire a CCTV operator.

Okay, jokes apart. The color moment can be improved. For example we can use watershed algorithm etc. This enhances the re-identification capability of the system.

13. What are some of the challenges observed?

- Very low lighting conditions.

- The camera network is different for every building

14. So what is different from other related projects?

There are different implementations. This is our approach by using color moments, without going to rich features and network graph which runs in real time with the utilization of the GPU. We were able to run up to 28 videos parallel without a single delay.

Limitations.

- We do not provide re-identification for aerial views. It’s very hard to compare bald heads. 😀 . Planning to do a thesis in this domain. 😀

- The system does not support multiple levels of footage. (1st floor, 2nd floor etc)

- The system does not support overlapping video footage.

Technologies used.

- C ++

- Open CV for image processing

- CUDA for parallel processing

- QT for UI

- Visual Studio for code editing

Project Link – Google doc link