Neural networks have become a hot topic since the rise of deep learning. In this series of tutorials we will go in depth into building complex networks with Keras and TensorFlow.

TensorFlow is an open-source machine learning library developed by Google. Keras provides a high-level API (a wrapper) around TensorFlow.

In this tutorial we'll look into building a very simple neural network in Keras, using TensorFlow as the backend. You can follow the TensorFlow installation instructions on this page. Using virtualenv is recommended to minimize dependency and version conflicts.

In this tutorial we will implement XOR (exclusive OR) logic in a neural network. XOR is interesting because it is not linearly separable: we cannot draw a single straight line that separates the two output classes. Let's look at a simple example. Suppose you are a shop owner with four customer segments, and you want a way to tell them apart. For your business, high-earning old people and middle-earning young people are the most loyal customers, and you are planning to offer discounts to these segments. Can we draw a single line that divides the high-return customers from the low-return customers? This is exactly the XOR scenario.
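To make "not linearly separable" concrete, here is a small NumPy sketch (the helper name `separable_by_a_line` is our own) that tries every line `w1*x1 + w2*x2 + b = 0` on a coarse grid of weights and checks whether any of them puts all four customer segments on the correct side. It finds a line for an AND pattern but none for XOR. The grid search only illustrates the point; the actual argument is short: a line that classified XOR correctly would need `b <= 0`, `w1 + b > 0` and `w2 + b > 0`, and adding the last two gives `w1 + w2 + b > -b >= 0`, so the point (1, 1) would land on the wrong side.

```python
import numpy as np

# Each row is (age, income): age 0 = old, 1 = young; income 0 = middle, 1 = high
X = np.array([[0, 0], [1, 0], [0, 1], [1, 1]])
y_xor = np.array([0, 1, 1, 0])  # loyal customers follow an XOR pattern
y_and = np.array([0, 0, 0, 1])  # an AND pattern, for comparison


def separable_by_a_line(X, y, grid=np.linspace(-2, 2, 41)):
    """Return True if some line w1*x1 + w2*x2 + b = 0 on the grid separates the classes.

    A point is predicted as class 1 when w1*x1 + w2*x2 + b > 0.
    """
    w1, w2, b = np.meshgrid(grid, grid, grid, indexing="ij")
    params = np.stack([w1.ravel(), w2.ravel(), b.ravel()], axis=1)  # (N, 3)
    scores = params[:, :2] @ X.T + params[:, 2:3]                   # (N, 4)
    predictions = (scores > 0).astype(int)
    # A candidate line works only if it gets all four points right
    return bool(np.any(np.all(predictions == y, axis=1)))


print("AND separable:", separable_by_a_line(X, y_and))  # True
print("XOR separable:", separable_by_a_line(X, y_xor))  # False
```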

So how can we solve this with a neural network (NN)? Let's start by importing the packages we need to build the NN.

Python is well suited to scientific computing and math-related work, so we import NumPy. NumPy is a Python library that makes array manipulation very easy, and Keras accepts NumPy arrays as inputs.

Keras provides two different APIs: the Functional API and the Sequential model. We will import the simpler one, the Sequential model, which can be seen as a linear stack of layers. Input data flows through these layers and is transformed on its way. Keras offers several types of layers (such as Dense, Dropout and merge layers), and combining them lets us model different kinds of neural networks for various machine learning tasks. In our scenario we only need the Dense layer.
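Under the hood, a Dense (fully connected) layer computes `activation(inputs @ weights + bias)`. The NumPy sketch below, with our own helper names `dense` and `relu`, stacks two such layers using hand-picked weights (not learned ones) to show how data is transformed layer by layer, and that two layers are already enough to reproduce XOR.

```python
import numpy as np


def relu(z):
    return np.maximum(0.0, z)


def dense(inputs, weights, bias, activation=lambda z: z):
    """What a Dense layer computes: activation(inputs @ weights + bias)."""
    return activation(inputs @ weights + bias)


X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)

# Hidden layer (hand-picked): unit 1 computes x1 + x2, unit 2 computes x1 + x2 - 1, both through ReLU
W1 = np.array([[1.0, 1.0], [1.0, 1.0]])
b1 = np.array([0.0, -1.0])

# Output layer (identity activation): h1 - 2 * h2
W2 = np.array([[1.0], [-2.0]])
b2 = np.array([0.0])

hidden = dense(X, W1, b1, relu)  # shape (4, 2)
output = dense(hidden, W2, b2)   # shape (4, 1)
print(output.ravel())            # [0. 1. 1. 0.]
```

Training a network amounts to finding weights like these automatically instead of choosing them by hand.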

The input is a two-dimensional array with one row per customer segment and two columns (age and income). The target (output) array is also two-dimensional, but it has just a single output column.

| Age (input 1) | Income (input 2) | Output |
|---|---|---|
| Old [0] | Middle income [0] | Non-loyal customer [0] |
| Young [1] | Middle income [0] | Loyal customer [1] |
| Old [0] | High income [1] | Loyal customer [1] |
| Young [1] | High income [1] | Non-loyal customer [0] |
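In NumPy, the table could be written as follows. The names `training_data` and `target_data` are our own choice; each row of `training_data` is one customer segment (age, income), and the matching row of `target_data` holds its label.

```python
import numpy as np

# One row per customer segment: [age, income]
# age: 0 = old, 1 = young; income: 0 = middle, 1 = high
training_data = np.array([[0, 0], [1, 0], [0, 1], [1, 1]], dtype="float32")

# One label per row: 1 = loyal customer, 0 = non-loyal customer
target_data = np.array([[0], [1], [1], [0]], dtype="float32")

print(training_data.shape, target_data.shape)  # (4, 2) (4, 1)
```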

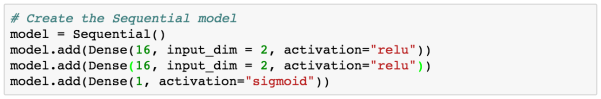

Now the interesting part begins: creating the model.

As mentioned before, we'll be using the Dense layer. The first parameter states the number of neurons in the layer, and the second parameter states the number of inputs given to the model. Let's not worry too much about the activation function yet; we'll talk about it in a moment. After this we add a second layer, which has only one neuron. Notice that the input dimension is not stated in the second layer: Keras infers it from the output of the first layer.
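A minimal sketch of this model, assuming TensorFlow 2.x with its bundled Keras (`from tensorflow import keras`). It uses 16 hidden neurons, the size this tutorial refers to when comparing models later. Here the input size is declared with a separate `keras.Input(shape=(2,))`; older Keras code usually passes `input_dim=2` to the first `Dense` layer instead. Because the second `Dense` layer takes its input size from the first layer's 16 outputs, the model has 2 * 16 + 16 = 48 parameters in the hidden layer and 16 + 1 = 17 in the output layer.

```python
from tensorflow import keras

# A Sequential model is a linear stack of layers
model = keras.Sequential([
    keras.Input(shape=(2,)),                      # two inputs per sample: age and income
    keras.layers.Dense(16, activation="relu"),    # hidden layer with 16 neurons
    keras.layers.Dense(1, activation="sigmoid"),  # output layer: a single neuron
])

model.summary()  # prints each layer's output shape and parameter count
```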

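Here we have used two activation functions: ReLU (rectified linear unit) in the hidden layer and sigmoid in the output layer. The idea is loosely inspired by the neurons in our heads, which must be activated to pass a signal on to the next neuron. Unlike a biological neuron, though, these functions don't simply fire once a threshold is met: ReLU outputs 0 for negative inputs and passes positive inputs through unchanged, while sigmoid smoothly squashes any input into the range 0 to 1, which makes it a natural fit for a yes/no output such as "loyal customer".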
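Both activation functions are one-liners in NumPy. This sketch (our own helper names) shows how each one transforms a few sample inputs.

```python
import numpy as np


def relu(z):
    """Rectified linear unit: 0 for negative inputs, the input itself otherwise."""
    return np.maximum(0.0, z)


def sigmoid(z):
    """Logistic sigmoid: squashes any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


z = np.array([-2.0, 0.0, 3.0])
print(relu(z))     # [0. 0. 3.]
print(sigmoid(z))  # approximately [0.119 0.5 0.953]
```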

The last step is to compile the model, and for that we pass it three things. First, for the NN to set the correct weights, we need to tell it how well it is performing. This is known as the loss (how badly it performed). We will pick mean_squared_error as the loss function.
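To see what this loss measures, here is mean squared error written out in NumPy for made-up predictions on the four XOR rows; the numbers are illustrative, not output from a trained model.

```python
import numpy as np


def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between targets and predictions."""
    return np.mean((y_true - y_pred) ** 2)


y_true = np.array([0.0, 1.0, 1.0, 0.0])  # XOR targets
y_pred = np.array([0.1, 0.9, 0.8, 0.3])  # hypothetical network outputs
print(mean_squared_error(y_true, y_pred))  # approximately 0.0375
```

The loss is 0 only when every prediction equals its target, so lowering it pushes the outputs toward the correct labels.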

The second thing is the optimizer. Its job is to find the right values for the weights and thus reduce the loss. We will use the Adam optimizer.
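The sketch below applies the textbook Adam update rule to a one-parameter toy loss `(w - 3)**2` instead of a real network, so the mechanics are easy to follow. Adam keeps running averages of the gradient and of the squared gradient, corrects their bias toward zero in early steps, and scales each step by their ratio. The beta and epsilon values mirror Keras's defaults; the learning rate of 0.1 (Keras defaults to 0.001) is our choice to make this toy example converge quickly.

```python
import numpy as np


def loss(w):
    return (w - 3.0) ** 2


def grad(w):
    return 2.0 * (w - 3.0)  # derivative of the toy loss


def adam_minimize(w, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-7, steps=1000):
    m, v = 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g       # running mean of gradients
        v = beta2 * v + (1 - beta2) * g ** 2  # running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)          # bias-corrected estimates
        v_hat = v / (1 - beta2 ** t)
        w = w - lr * m_hat / (np.sqrt(v_hat) + eps)
    return w


w_final = adam_minimize(0.0)
print(w_final, loss(w_final))  # w ends up very close to 3, the minimum of the loss
```

In a neural network the same update is applied to every weight, with the gradient of the loss computed by backpropagation.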

You might ask whether these are the only loss and optimization functions you can use. No! Even if we use binary_crossentropy as the loss function, the NN works without a hassle. That does not mean all loss functions are interchangeable, though; it takes some trial and error to learn which one fits which scenario.
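For comparison, here is binary cross-entropy next to mean squared error in NumPy, evaluated on made-up predictions for the four XOR rows. The helper names are our own, and predictions are clipped away from 0 and 1 to avoid `log(0)`.

```python
import numpy as np


def mean_squared_error(y_true, y_pred):
    return np.mean((y_true - y_pred) ** 2)


def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Average negative log-likelihood of the true labels under the predicted probabilities."""
    y_pred = np.clip(y_pred, eps, 1 - eps)  # avoid log(0)
    return -np.mean(y_true * np.log(y_pred) + (1 - y_true) * np.log(1 - y_pred))


y_true = np.array([0.0, 1.0, 1.0, 0.0])
y_pred = np.array([0.1, 0.9, 0.8, 0.3])  # hypothetical network outputs

print(mean_squared_error(y_true, y_pred))   # approximately 0.0375
print(binary_crossentropy(y_true, y_pred))  # approximately 0.1976
```

Both losses shrink toward 0 as predictions approach the targets, which is why either works here. Cross-entropy punishes confident mistakes much harder: predicting 0.01 for a true label of 1 costs about 4.6 under cross-entropy but less than 1 under squared error.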

The third parameter is the metric used to measure accuracy. Here we'll use binary_accuracy to calculate the accuracy of the predictions.
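Binary accuracy turns each predicted probability into a 0/1 label using a threshold (0.5 by default in Keras) and reports the fraction of labels that match. A NumPy sketch with our own helper name and made-up predictions:

```python
import numpy as np


def binary_accuracy(y_true, y_pred, threshold=0.5):
    """Fraction of predictions that match the labels after thresholding."""
    predicted_labels = (y_pred > threshold).astype(y_true.dtype)
    return np.mean(predicted_labels == y_true)


y_true = np.array([0.0, 1.0, 1.0, 0.0])
y_pred = np.array([0.1, 0.9, 0.8, 0.6])  # hypothetical outputs; the last one is wrong
print(binary_accuracy(y_true, y_pred))   # 0.75
```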

Finally, we start the training process by calling the fit function. In our run, the model reached 100% accuracy at the 54th epoch (one full pass over the training data). If you run it several times, this number will change slightly, because the weights are initialized randomly before training starts.
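Putting the pieces together, a minimal end-to-end sketch might look like this, assuming TensorFlow 2.x with its bundled Keras. The data layout follows the table, and 500 epochs is an upper bound we chose. The `next(...)` expression scans the per-epoch `binary_accuracy` values recorded in the `History` object returned by `fit` to find the first epoch at 100%; because of the random initialization, expect that number to differ between runs.

```python
import numpy as np
from tensorflow import keras

# XOR data from the table: [age, income] -> loyal (1) or not (0)
training_data = np.array([[0, 0], [1, 0], [0, 1], [1, 1]], dtype="float32")
target_data = np.array([[0], [1], [1], [0]], dtype="float32")

model = keras.Sequential([
    keras.Input(shape=(2,)),
    keras.layers.Dense(16, activation="relu"),
    keras.layers.Dense(1, activation="sigmoid"),
])

# Loss, optimizer and metric as described in the text
model.compile(loss="mean_squared_error", optimizer="adam", metrics=["binary_accuracy"])

# One epoch = one pass over the four training rows
history = model.fit(training_data, target_data, epochs=500, verbose=0)

# First epoch (1-based) whose recorded accuracy is 100%, or None if it never got there
accuracy_per_epoch = history.history["binary_accuracy"]
first_perfect = next(
    (epoch for epoch, acc in enumerate(accuracy_per_epoch, start=1) if acc == 1.0), None
)
print("First epoch at 100% accuracy:", first_perfect)

predictions = model.predict(training_data, verbose=0)
print(predictions.round())  # ideally [[0], [1], [1], [0]]
```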

Tweaking the model.

Okay, we have built a very simple neural network, so can we tweak it? What we have built looks something like the image below. So what can we change in this neural network?

Increasing the number of hidden layers

Let's try adding another hidden layer and check whether we reach 100% accuracy more quickly. So how do we add a new layer? Simple: we just append one more line to the model.
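Adding a hidden layer is one more `Dense` entry in the stack. In the sketch below, which assumes the same TensorFlow/Keras setup, the extra layer's 16 neurons and ReLU activation are our assumption; training then proceeds with `fit` exactly as before.

```python
from tensorflow import keras

model = keras.Sequential([
    keras.Input(shape=(2,)),
    keras.layers.Dense(16, activation="relu"),
    keras.layers.Dense(16, activation="relu"),    # the extra hidden layer (size assumed)
    keras.layers.Dense(1, activation="sigmoid"),
])
model.compile(loss="mean_squared_error", optimizer="adam", metrics=["binary_accuracy"])
```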

The results are really good: we reached 100% accuracy by the 11th epoch.

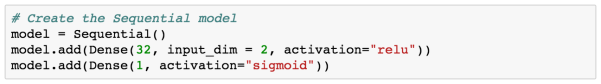

Now let's go back to a single hidden layer, increase the number of neurons in it, and see whether the model reaches 100% accuracy sooner.
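The wider variant changes only the neuron count of the single hidden layer, here 32 (the size in the comparison that follows). A sketch under the same TensorFlow/Keras assumptions:

```python
from tensorflow import keras

model = keras.Sequential([
    keras.Input(shape=(2,)),
    keras.layers.Dense(32, activation="relu"),    # one hidden layer, now with 32 neurons
    keras.layers.Dense(1, activation="sigmoid"),
])
model.compile(loss="mean_squared_error", optimizer="adam", metrics=["binary_accuracy"])
```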

Oh! This has increased the number of epochs needed to reach 100% accuracy. Previously, with 16 neurons, we converged at around the 54th epoch, but with 32 neurons in the layer it took 86 epochs. So increasing the number of neurons does not always yield better results (keep in mind that these counts also vary from run to run because of the random weight initialization).

Through this tutorial I mainly wanted to convey that you don't have to be a mathematician to build machine learning models or do machine learning. A basic understanding of how things work is enough to get you started. Machine learning theory, on the other hand, requires more in-depth math knowledge. See you in the next tutorial, where we'll solve some more interesting problems.